From sandbox validation to enterprise-grade stability under hyper-growth.

TL;DR: In one month, we turned a rapidly growing deployment into a stable, production-ready Private Cloud cluster. After stress testing, fine-tuning, and extending test coverage with custom scripts, the system went live and stayed stable when it mattered most.

What starts as a low-risk validation project can rapidly evolve into a critical operation. But what happens when a pilot grows tenfold in record time? At that stage, businesses face a major dilemma: how to build a reliable, fault-tolerant platform for their users without falling into the infrastructure trap. The customer’s focus should be on the product, not on building DevOps departments, managing server architecture, or overseeing complex maintenance cycles.

This is where the ThingsBoard Private Cloud platform steps in.

The challenge: when standard plans are not enough

Fast-growing IoT projects often present requirements that differ significantly from standard telemetry use cases:

- Massive persistent connectivity: always-on connections from hundreds of thousands of devices.

- Mass operations: firmware updates and bulk provisioning without overloading core services.

- Critical deadlines: seasonal spikes demand stability long before traffic arrives.

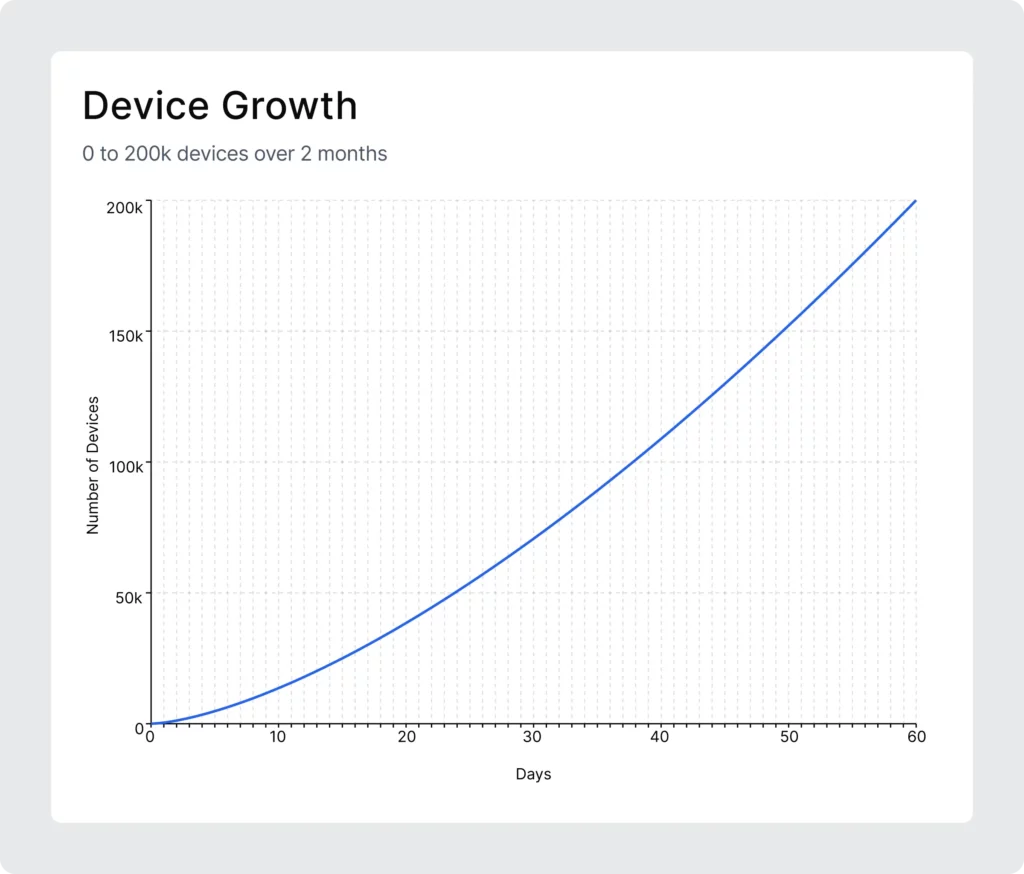

Private Cloud’s standard tiers have defined limits. The Scale plan, for example, is designed to handle up to 50,000 devices, but what happens when the target is to maintain 200,000+ connected devices?

Our solution treated scaling as a planned, predictable process, not an emergency. We designed the architecture with future growth in mind, validated it through stress testing in a production-like environment, and pinpointed the exact bottlenecks. Based on these results, we applied targeted horizontal scaling and fine-tuned the core services, delivering major architectural improvements with minimal downtime and keeping the platform consistently ahead of rapid growth.

Step 1: Migration to managed infrastructure

Transitioning from a self-hosted deployment to Private Cloud is the first strategic step toward stability. Starting with a tailored Launch plan provides immediate operational stabilization with minimal downtime. All Private Cloud deployment plans are designed to operate reliably across diverse use cases.

Step 2: Hitting the ceiling

The Launch plan was sufficient for the initial device count. However, as the platform began to scale rapidly, it became clear that projected growth would soon exceed the practical limits of the standard Private Cloud plans.

At that stage, we identified a critical gap: our existing test coverage did not fully reflect real-world usage patterns, particularly device connectivity spikes and concurrent OTA updates. The timeline added to the challenge: within two weeks, the platform was expected to face a surge in device connections combined with simultaneous firmware update requests.

As the fleet rapidly approached 50,000 devices, it became clear that a simple plan upgrade would not be enough. The solution had to be driven by engineering decisions, not guesswork. By quickly building the missing test scenarios, validating suspected issues in a production-like environment, and applying targeted tuning across the stack, our Private Cloud engineering team kept the platform stable and ready for continued growth.

Step 3: The 200k stress test

Reliability should never be theoretical. To prepare for loads far above the standard 50k limit, we designed a dedicated test scenario:

- A mirror cluster with production-equivalent settings.

- A storm simulation: 200,000 devices connecting both sequentially and simultaneously, holding sessions, and running OTA firmware updates.

- Detailed metrics and logs captured throughout the test to expose the system’s weak points.
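To make the storm scenario more concrete, here is a minimal Python sketch of a load-test harness that drives device connections either as a paced ramp or as a simultaneous burst, with a cap on in-flight connection attempts. It is an illustration under stated assumptions, not the tooling used in this project: `connect_device` is a hypothetical stub that only sleeps, and `run_storm`, `StormResult`, `max_in_flight`, and `ramp_per_second` are illustrative names. A real test would replace the stub with an MQTT client session and add session holding and OTA requests on top.

```python
import asyncio
import random
import time
from dataclasses import dataclass


@dataclass
class StormResult:
    mode: str
    connected: int
    failed: int
    p95_connect_ms: float


async def connect_device(device_id: int) -> float:
    """Hypothetical stand-in for opening one device session.

    It only sleeps for a random interval so the harness can run without a
    broker; swap in a real MQTT client call for an actual test.
    Returns the simulated connect latency in milliseconds.
    """
    start = time.perf_counter()
    await asyncio.sleep(random.uniform(0.001, 0.005))
    return (time.perf_counter() - start) * 1000.0


async def run_storm(num_devices: int, mode: str, max_in_flight: int = 500,
                    ramp_per_second: float = 1000.0) -> StormResult:
    """Connect num_devices devices as a paced "ramp" or an all-at-once "burst"."""
    if mode not in ("ramp", "burst"):
        raise ValueError(f"unknown mode: {mode!r}")

    # Caps concurrent connection attempts, as a load generator's pool would.
    in_flight = asyncio.Semaphore(max_in_flight)
    latencies: list[float] = []
    failed = 0

    async def one(device_id: int) -> None:
        nonlocal failed
        async with in_flight:
            try:
                latencies.append(await connect_device(device_id))
            except Exception:
                failed += 1

    tasks = []
    for device_id in range(num_devices):
        tasks.append(asyncio.create_task(one(device_id)))
        if mode == "ramp":
            # Pace task creation to approximate a steady arrival rate.
            await asyncio.sleep(1.0 / ramp_per_second)
    await asyncio.gather(*tasks)

    # Approximate p95 of the measured connect latencies (queue wait excluded).
    latencies.sort()
    p95 = latencies[int(0.95 * (len(latencies) - 1))] if latencies else 0.0
    return StormResult(mode, len(latencies), failed, p95)
```

For example, `asyncio.run(run_storm(2_000, "burst"))` returns a `StormResult` that can be compared against a `"ramp"` run of the same size. Separating the two modes matters because a burst stresses connection-accept paths and load balancers very differently from a gradual ramp.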

We also gave the client access to the reporting dashboard to ensure full transparency throughout the process and to demonstrate the maturity of the Private Cloud setup. By exposing real-time system metrics under peak load, we enabled the client to observe directly how the platform behaves under stress, verify their business assumptions with factual data, and gain confidence in the engineering decisions being made. This level of visibility reinforced trust and highlighted the operational readiness and robustness of the Private Cloud environment.

Step 4: Surgical engineering and horizontal scaling

Based on stress-test data, we eliminated bottlenecks and tuned the stack to ensure platform stability under high load. Once optimized, the cluster successfully passed the 200k-device stress test and was confirmed ready for production.

Uncontrolled horizontal scaling can quickly lead to unsustainable infrastructure costs. Achieving efficient scale requires a clear understanding of system load, accurate bottleneck analysis, and deliberate decisions about what to scale.

In this case, the primary bottlenecks were identified as follows:

- Connection limits per load balancer: addressed by adding load balancer replicas.

- OTA update caching layer (Redis/Valkey): scaled horizontally to handle increased concurrent firmware distribution.

- Memory constraints in MQTT transport services: resolved by increasing memory allocations to support higher connection density.

This targeted approach allowed us to scale only the components under real pressure, ensuring performance gains without inflating operational costs.
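Once stress testing reveals per-component limits, deciding how many replicas or how much memory to allocate comes down to simple capacity arithmetic. The sketch below assumes hypothetical inputs: a per-replica connection limit, a safety headroom fraction, and a linear per-connection memory cost. None of these figures come from this deployment, and `replicas_needed` and `transport_memory_gib` are illustrative helper names.

```python
import math


def replicas_needed(target_connections: int, per_replica_limit: int,
                    headroom: float = 0.25) -> int:
    """Smallest replica count that keeps each replica at or below
    (1 - headroom) of its connection limit."""
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    usable_per_replica = per_replica_limit * (1.0 - headroom)
    return math.ceil(target_connections / usable_per_replica)


def transport_memory_gib(connections: int, kib_per_connection: float,
                         baseline_gib: float) -> float:
    """Rough memory estimate: fixed baseline plus a linear per-connection cost."""
    return baseline_gib + connections * kib_per_connection / (1024 * 1024)


# Illustrative inputs only; real limits come from stress-test measurements.
print(replicas_needed(200_000, 50_000))                   # → 6
print(round(transport_memory_gib(200_000, 16, 2.0), 2))   # → 5.05
```

With these made-up inputs, 200,000 connections against a 50,000-connection replica limit and 25% headroom yields six replicas rather than the four a naive division suggests; the headroom is what keeps each replica below its ceiling during connection spikes.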

Step 5: The invisible deployment and hands-on live support

We applied these architectural changes directly to the live environment without service disruption. Within carefully planned maintenance windows, the scaled resources and optimized configurations were rolled out with zero downtime. The core platform components handling device connectivity were effectively rebuilt while fully operational, without forcing device reconnections or causing any service instability.

On launch day, we reinforced the engineering team to provide continuous, real-time monitoring as devices came online. Engineers actively tracked live dashboards, load indicators, and system behavior so that any anomalies were detected and addressed before they could affect the client’s operations. This hands-on approach during a critical transition phase ensured full operational control, fast response to edge cases, and confidence that the platform would remain stable under real production load, not just in test conditions.
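One simple signal worth watching during a launch like this is a sudden drop in the number of connected devices. The sketch below shows a hypothetical `DropDetector` that compares each new sample with the peak of a short sliding window; the window size and 5% threshold are illustrative assumptions, not the alerting rules used on launch day.

```python
from collections import deque


class DropDetector:
    """Flags a sudden fall in connected devices relative to a recent peak."""

    def __init__(self, window: int = 12, max_drop_fraction: float = 0.05):
        # Keep only the last `window` samples (e.g. one per scrape interval).
        self.samples: deque[int] = deque(maxlen=window)
        self.max_drop_fraction = max_drop_fraction

    def observe(self, connected: int) -> bool:
        """Record a sample; return True if it sits more than
        max_drop_fraction below the peak of the preceding window."""
        peak = max(self.samples, default=connected)
        self.samples.append(connected)
        return connected < peak * (1.0 - self.max_drop_fraction)


detector = DropDetector(window=3)
for count in (1000, 1010, 990, 900):
    # Only the last sample (900) falls more than 5% below the peak of 1010.
    print(count, detector.observe(count))
```

Because devices come online gradually during a launch, a rising count is expected; the detector only reacts to falls, which typically indicate mass disconnects worth investigating immediately.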

The outcome:

- A production-ready environment capable of sustaining uninterrupted operation under peak loads exceeding 200,000 connected devices.

- Proactive monitoring during peak hours to resolve anomalies early.

- Zero incidents: no crashes, no lag, stable operations throughout.

Scalability should never be a barrier. Whether the goal is ten thousand or two hundred thousand devices, ThingsBoard’s Private Cloud provides an engineering partner that stays one step ahead of growth and anticipates your business needs.

After completing all stages of analysis and tuning for this case, we concluded that standardized, unified plans do not always fit every scenario. Strictly following predefined guidelines is not always optimal: complex environments require flexibility and the ability to adapt to unique business and load patterns, as this case demonstrates. The most effective strategy proved to be a balanced combination of standardization and targeted customization, allowing us to preserve operational consistency while addressing specific scaling challenges efficiently.